In today’s data-driven world, businesses and organizations need robust solutions to store, process, and analyze vast amounts of data. That’s where Apache Hadoop comes into play. In this article, we’ll dive deep into Apache Hadoop and discuss its architecture, components, benefits, and real-world applications. We’ll also answer some frequently asked questions to give you a better understanding of this powerful big data platform.

Apache Hadoop: The Leading Open Source Big Data Platform

What is Apache Hadoop?

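Apache Hadoop is an open-source software framework for the distributed storage and processing of large datasets across clusters of computers. It is designed to scale up from a single server to thousands of machines, each offering local computation and storage. Rather than relying on specialized hardware for high availability, Hadoop detects and handles failures in software, so a cluster can keep running even when individual machines fail.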

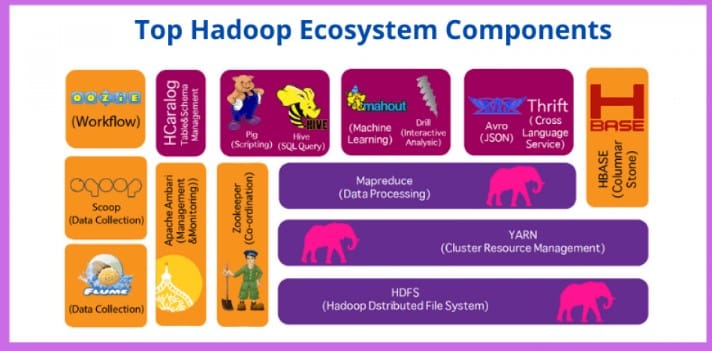

The Hadoop Ecosystem

The Apache Hadoop project is built around four core modules, on top of which a broader ecosystem of tools (such as Apache Hive and Apache HBase) has grown:

- Hadoop Distributed File System (HDFS): A distributed file system that stores data across multiple nodes, providing fault tolerance and high throughput.

- MapReduce: A programming model and execution framework for processing large datasets in parallel.

- YARN (Yet Another Resource Negotiator): A resource management and job scheduling system for Hadoop clusters.

- Hadoop Common: A set of shared utilities and libraries used by other Hadoop modules.
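YARN’s main job is to hand out cluster resources: its ResourceManager grants applications containers, which are allocations of memory and CPU (vcores) on individual worker nodes run by NodeManagers. The toy first-fit allocator below is only a sketch of that idea under simplifying assumptions. The `Node` class and `allocate` function are hypothetical, and real YARN uses pluggable schedulers such as the Capacity Scheduler and Fair Scheduler, with queues, priorities, and data-locality preferences.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A worker node (hypothetical stand-in for a YARN NodeManager)."""
    name: str
    free_mb: int
    free_vcores: int
    containers: list = field(default_factory=list)


def allocate(nodes, requests):
    """First-fit placement of container requests.

    Each request is (app_id, memory_mb, vcores). A request is placed on the
    first node with enough free memory and vcores; requests that fit nowhere
    are returned as pending, to be retried when resources free up.
    """
    pending = []
    for app_id, memory_mb, vcores in requests:
        for node in nodes:
            if node.free_mb >= memory_mb and node.free_vcores >= vcores:
                node.free_mb -= memory_mb
                node.free_vcores -= vcores
                node.containers.append(app_id)
                break
        else:  # no node had room for this request
            pending.append((app_id, memory_mb, vcores))
    return pending


cluster = [Node("node1", 8192, 4), Node("node2", 4096, 2)]
requests = [("app1", 4096, 2), ("app1", 4096, 2), ("app2", 4096, 2), ("app2", 2048, 1)]
pending = allocate(cluster, requests)
print(pending)                                    # [('app2', 2048, 1)]
print([(n.name, n.containers) for n in cluster])  # [('node1', ['app1', 'app1']), ('node2', ['app2'])]
```

The last request stays pending because both nodes are full, which mirrors how applications on a busy cluster wait for resources to be released.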

HDFS: Scalable Storage Solution

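HDFS is the backbone of the Hadoop ecosystem, providing a distributed and scalable storage solution for massive datasets. Files are split into large blocks (128 MB by default in current Hadoop releases), and each block is replicated across multiple nodes (three copies by default), which provides high availability and fault tolerance. A central NameNode keeps the file system metadata, such as which blocks make up each file and where they are stored, while DataNodes store the blocks themselves.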
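As a back-of-the-envelope illustration, the sketch below computes how a file is divided into blocks and how much raw disk space it uses after replication, assuming the default block size of 128 MB (134,217,728 bytes) and the default replication factor of 3. Both values are configurable in HDFS through `dfs.blocksize` and `dfs.replication`. The helper functions are hypothetical; this is arithmetic only, not the HDFS API.

```python
MIB = 1024 * 1024
BLOCK_SIZE = 128 * MIB   # HDFS default block size (dfs.blocksize) in Hadoop 2.x and 3.x
REPLICATION = 3          # HDFS default replication factor (dfs.replication)


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes, in bytes, of the blocks a file is split into."""
    full_blocks, remainder = divmod(file_size, block_size)
    blocks = [block_size] * full_blocks
    if remainder:
        # The final block only holds the leftover bytes; it does not
        # consume a full block's worth of disk space.
        blocks.append(remainder)
    return blocks


def raw_storage(file_size, replication=REPLICATION):
    """Total bytes stored across the cluster once every block is replicated."""
    return file_size * replication


size = 300 * MIB
print([b // MIB for b in split_into_blocks(size)])  # [128, 128, 44]
print(raw_storage(size) // MIB)                     # 900
```

A 300 MB file therefore occupies three blocks and roughly 900 MB of raw cluster storage, which is the price paid for being able to lose nodes without losing data.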

MapReduce: Parallel Data Processing

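MapReduce is Hadoop’s original, built-in data processing engine. It allows developers to write programs that process vast amounts of data in parallel across a large number of nodes. A job runs in two main phases: the map phase transforms input records into intermediate key-value pairs, and, after the framework shuffles and sorts those pairs by key, the reduce phase aggregates all the values that share the same key.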
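The classic word-count example illustrates the MapReduce model: a map step emits a (word, 1) pair for every word, a shuffle step groups the pairs by word, and a reduce step sums the counts. This is a single-process Python sketch of the model, not Hadoop’s Java API. The function names are hypothetical, and in a real job the framework would run many map and reduce tasks in parallel on different nodes and move the intermediate data between them over the network.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_and_sort(pairs):
    """Shuffle: group all values by key, with keys in sorted order."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_phase(key, values):
    """Reduce: combine all counts for a single word."""
    return key, sum(values)


def word_count(lines):
    intermediate = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle_and_sort(intermediate))


print(word_count(["the quick fox", "The lazy dog", "the end"]))
# {'dog': 1, 'end': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 3}
```

Because each call to `map_phase` depends only on its own line and each call to `reduce_phase` only on one key’s values, both steps can be spread across machines, which is what makes the model parallelize so well.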

Benefits of Apache Hadoop

Some of the key benefits of using Apache Hadoop include:

- Scalability: Hadoop scales horizontally. Storage and processing capacity grow by adding nodes to the cluster, and data is distributed and replicated across those nodes for high availability and fault tolerance.

- Cost-effectiveness: Hadoop is open source and free to use, and it runs on clusters of commodity hardware rather than expensive specialized systems, making it a cost-effective way to store and process big data.

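- Flexibility: Hadoop can store and process structured, semi-structured, and unstructured data (such as text, log files, JSON, or images) without requiring a predefined schema, making it a versatile platform for diverse data processing needs.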

Real-World Applications of Apache Hadoop

Apache Hadoop has been adopted by numerous organizations across various industries. Here are a few examples of its real-world applications:

Financial Services

In the financial services sector, Apache Hadoop is used for fraud detection, risk management, and customer analytics. By processing large volumes of transaction and market data, financial institutions can make better-informed decisions and mitigate risks.

Healthcare

Hadoop plays a crucial role in analyzing medical data, including electronic health records, medical images, and genomic data. It enables healthcare organizations to gain insights into patient outcomes, optimize treatments, and improve patient care.

Retail

Retailers use Hadoop to analyze customer data, such as purchase history, preferences, and demographics, to deliver personalized marketing campaigns and improve customer satisfaction.

Telecommunications

In the telecommunications industry, Hadoop is used to process and analyze massive amounts of call detail records (CDRs), enabling companies to optimize network performance and enhance customer experience.

Apache Hadoop: Frequently Asked Questions

- What is the difference between Hadoop and traditional databases?

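Traditional relational databases store data in predefined tables and are accessed through SQL (Structured Query Language), which makes them well suited to transactional workloads and fast queries on structured data. Hadoop instead stores data as files in a distributed file system (HDFS) and processes it in parallel (for example, with MapReduce). This makes Hadoop better suited to batch processing of massive unstructured and semi-structured datasets, offering greater flexibility and scalability than traditional databases. SQL-style queries are still possible on Hadoop through tools such as Apache Hive.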

- How does Apache Hadoop ensure data reliability?

Hadoop achieves data reliability through HDFS, which replicates each data block across multiple nodes (three copies by default). If a node fails, its data remains available from the other replicas, and the NameNode schedules new copies to restore the target replication factor, safeguarding data against hardware failures.
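The sketch below models why replication protects against failures: with every block stored on three different nodes, any two nodes can fail without any block becoming unreadable. It is a deliberate simplification with hypothetical function names. It uses simple round-robin placement, whereas HDFS’s default placement policy is rack-aware, spreading replicas across racks so that data also survives the loss of an entire rack.

```python
def place_replicas(block_ids, nodes, replication=3):
    """Assign each block to `replication` distinct nodes in round-robin order."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    placement = {}
    for i, block in enumerate(block_ids):
        start = i % len(nodes)
        placement[block] = [nodes[(start + k) % len(nodes)] for k in range(replication)]
    return placement


def readable_blocks(placement, failed_nodes):
    """Blocks that still have at least one replica on a live node."""
    return {block for block, replicas in placement.items()
            if any(node not in failed_nodes for node in replicas)}


def under_replicated(placement, failed_nodes, replication=3):
    """Blocks that need new copies to get back to the target replication factor."""
    return {block for block, replicas in placement.items()
            if sum(node not in failed_nodes for node in replicas) < replication}


nodes = ["dn1", "dn2", "dn3", "dn4"]
placement = place_replicas(["b0", "b1", "b2", "b3"], nodes)
print(sorted(readable_blocks(placement, {"dn1", "dn2"})))         # ['b0', 'b1', 'b2', 'b3']
print(sorted(readable_blocks(placement, {"dn1", "dn2", "dn3"})))  # ['b1', 'b2', 'b3']
print(sorted(under_replicated(placement, {"dn4"})))               # ['b1', 'b2', 'b3']
```

In this model, `under_replicated` plays the role of the NameNode’s bookkeeping: it identifies the blocks that should be copied to healthy nodes before further failures put them at risk.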

- Is Apache Hadoop suitable for real-time data processing?

Hadoop is primarily designed for batch processing, so on its own it is not a real-time system. However, it can be combined with streaming technologies such as Apache Kafka (for ingesting event streams) and Apache Storm (for processing them as they arrive) to support real-time analytics.

- What programming languages can be used with Hadoop?

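Hadoop is written in Java, and its native MapReduce API is Java-based. However, Hadoop Streaming, a utility included with Hadoop, lets you write mappers and reducers in any language that can read from standard input and write to standard output, including Python, Ruby, and R. Third-party libraries and APIs provide further language support.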
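To illustrate the Hadoop Streaming approach, here is a sketch of a Python mapper and reducer that total call durations per caller, echoing the call-detail-record use case above. The input format (`caller,duration_seconds` lines), the script name `cdr_totals.py`, and the `map`/`reduce` command-line argument are assumptions made for this example. Hadoop Streaming feeds input records to the mapper on standard input, treats each output line as a key and value separated by a tab, and sorts mapper output by key before it reaches the reducer, which is why the reducer can simply group consecutive lines.

```python
import sys
from itertools import groupby


def mapper(lines):
    """Turn 'caller,duration_seconds' records into 'caller<TAB>duration' lines."""
    for line in lines:
        parts = line.strip().split(",")
        if len(parts) != 2 or not parts[1].isdigit():
            continue  # skip malformed records instead of failing the task
        caller, duration = parts
        yield f"{caller}\t{duration}"


def reducer(lines):
    """Sum durations per caller; assumes input lines arrive sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines if line.strip())
    for caller, group in groupby(pairs, key=lambda pair: pair[0]):
        total = sum(int(duration) for _, duration in group)
        yield f"{caller}\t{total}"


if __name__ == "__main__" and len(sys.argv) > 1 and sys.argv[1] in ("map", "reduce"):
    # Run as `python cdr_totals.py map` or `python cdr_totals.py reduce`.
    stage = mapper if sys.argv[1] == "map" else reducer
    for output_line in stage(sys.stdin):
        print(output_line)
```

On a cluster, the two stages would be supplied to Hadoop Streaming through its `-mapper` and `-reducer` options. The same logic can be tried locally with a pipeline such as `cat calls.csv | python cdr_totals.py map | sort -k1,1 | python cdr_totals.py reduce`, where `calls.csv` is a hypothetical input file and `sort` stands in for Hadoop’s shuffle.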

- What are some alternatives to Apache Hadoop?

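There are several alternatives to Hadoop for big data processing, including Apache Spark, Apache Flink, and Google Cloud Dataflow (a managed service for running Apache Beam pipelines). Spark and Flink can also run on Hadoop YARN and read data from HDFS, so they are often used alongside Hadoop rather than in place of it. Each platform has its own strengths, and the right choice depends on the specific requirements of your project.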

- Can I run Hadoop on a single machine?

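Yes. Hadoop can run on a single machine in local (standalone) mode, where everything runs in a single Java process, or in pseudo-distributed mode, where each Hadoop daemon runs as a separate process on the same machine. Both setups are useful for development and testing. For production environments and large-scale data processing, a fully distributed multi-node cluster is recommended.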

Conclusion

In conclusion, Apache Hadoop has revolutionized the way businesses and organizations process and analyze massive datasets. With its scalable, cost-effective, and flexible architecture, Hadoop has become a widely adopted solution for big data processing across many industries. By understanding the components and benefits of Apache Hadoop, you can harness the power of this open-source platform to derive valuable insights from your data.
