Welcome to the world of Apache Spark. As data volumes keep growing, businesses need tools that can analyze and process massive datasets quickly, often in near real time. That’s where Apache Spark steps in. In this guide, we’ll take you through what Spark is, its benefits, its core components, how to get started, and the best practices that keep it fast.

Spark: Lightning-Fast Big Data Analytics and Processing

What is Apache Spark?

Apache Spark is an open-source, distributed computing system designed for fast and scalable big data processing. It has quickly become one of the most popular big data frameworks, providing advanced capabilities for data processing, analytics, machine learning, and graph processing.

The Origins of Spark

Spark was originally developed in 2009 at the AMPLab at the University of California, Berkeley, and was open-sourced in 2010. It was donated to the Apache Software Foundation in 2013 and became a top-level Apache project in 2014.

Benefits of Using Spark

Speed and Performance

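Spark’s processing speed sets it apart from older big data frameworks. By keeping working data in memory and optimizing execution plans, Spark avoids much of the disk I/O that Hadoop’s MapReduce performs between stages. The often-quoted figure of "up to 100 times faster" applies to in-memory workloads such as iterative algorithms; actual gains depend on the workload and on whether the data fits in memory.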

Ease of Use

Spark offers high-level APIs in various programming languages, such as Scala, Java, Python, and R, making it accessible to a wide range of developers. Its user-friendly APIs make it easier to develop and deploy big data applications.

Flexibility and Scalability

Spark is highly versatile and can be used for batch processing, interactive queries, streaming, machine learning, and graph processing. It can also scale from a single laptop to thousands of servers, making it suitable for businesses of all sizes.

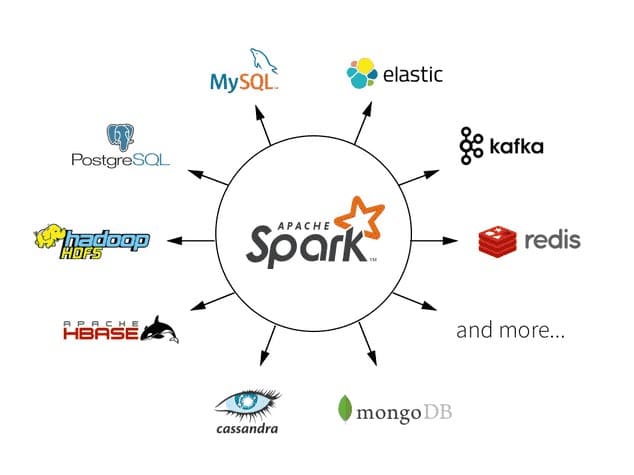

Integration with Other Tools

Spark can easily integrate with various big data ecosystems, such as Hadoop, Hive, HBase, and more. This allows businesses to leverage their existing infrastructure and tools while taking advantage of Spark’s superior capabilities.

Spark’s Core Components

Spark Core

The foundation of the Spark framework, Spark Core provides essential functionalities such as task scheduling, memory management, and fault recovery.

Spark SQL

Spark SQL lets users query structured data using SQL or the DataFrame API, and it integrates with the popular Hive data warehouse.

Spark Streaming

Spark Streaming enables near-real-time data processing by ingesting live data streams and processing them in small batches as they arrive.
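To make the "small batches" idea concrete, here is a minimal sketch in plain Python. It is not Spark's API: the `micro_batches` and `word_counts` helpers and the `events` list are hypothetical, and the sketch groups records by count for simplicity, whereas Spark Streaming groups them by a time interval.

```python
from collections import Counter
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(stream: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive batches of at most batch_size records from a stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def word_counts(batch: List[str]) -> Counter:
    """Count words across all lines in one batch."""
    counts: Counter = Counter()
    for line in batch:
        counts.update(line.split())
    return counts


# Hypothetical input: each string stands in for one record arriving on a stream.
events = ["spark is fast", "spark scales", "streams arrive", "fast streams", "done"]

# Each batch is processed independently, as soon as it is complete.
results = [word_counts(batch) for batch in micro_batches(events, batch_size=2)]

for number, counts in enumerate(results):
    print(f"batch {number}: {dict(counts)}")
```

Each batch produces its own result, so output is available shortly after the data arrives instead of after the whole dataset has been read.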

MLlib

MLlib is Spark’s built-in machine learning library, offering scalable implementations of common algorithms for classification, regression, clustering, and collaborative filtering.

GraphX

GraphX is a library for graph processing and computation, allowing users to process and analyze graph data with ease.

Getting Started with Spark

Installation

Spark runs on Linux, macOS, and Windows and requires a Java runtime. Python users can also install it from PyPI as the pyspark package. Follow the official installation guide for the exact version requirements.

Running Spark Applications

Spark applications can be developed using Scala, Java, Python, or R. You can submit Spark applications using the spark-submit command, which launches the application on the specified cluster.
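As a rough illustration, the sketch below assembles a spark-submit command line in Python. The `build_spark_submit` helper, the file names `wordcount.py` and `input.txt`, and the memory value are assumptions made for the example. The `--master`, `--deploy-mode`, and `--conf` options are standard spark-submit options, and they must appear before the application file.

```python
import shlex
from typing import Dict, List, Optional


def build_spark_submit(
    app: str,
    master: str,
    deploy_mode: str = "client",
    conf: Optional[Dict[str, str]] = None,
    app_args: Optional[List[str]] = None,
) -> List[str]:
    """Assemble a spark-submit argument list (options first, then the app)."""
    command = ["spark-submit", "--master", master, "--deploy-mode", deploy_mode]
    for key, value in sorted((conf or {}).items()):
        command += ["--conf", f"{key}={value}"]
    command.append(app)
    command += app_args or []
    return command


command = build_spark_submit(
    app="wordcount.py",        # hypothetical application file
    master="local[4]",         # run locally with 4 worker threads
    conf={"spark.executor.memory": "2g"},  # illustrative value
    app_args=["input.txt"],    # hypothetical argument passed to the application
)

# Print a shell-safe version of the command for inspection.
print(shlex.join(command))
```

On a machine with Spark installed, the list could be passed to `subprocess.run(command, check=True)`. For a cluster, the `master` value would be replaced with something like `yarn` or a `spark://host:port` URL.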

Use Cases of Spark

Data Processing and Analytics

Spark excels at processing and analyzing large volumes of data quickly, making it ideal for big data analytics, data warehousing, and business intelligence applications.

Real-Time Data Processing

Thanks to Spark Streaming, businesses can process data streams as they arrive and act on the results sooner, instead of waiting for a nightly batch job.

Machine Learning and AI

Spark’s MLlib library provides a robust set of machine learning algorithms that can be easily implemented for various AI applications, such as recommendation systems, fraud detection, and predictive analytics.

Graph Processing

GraphX allows businesses to analyze and process graph data to uncover hidden patterns, relationships, and insights, making it suitable for social network analysis, network optimization, and more.

Best Practices for Spark

Data Partitioning

To optimize performance, it’s crucial to partition your data correctly. Proper data partitioning ensures an even distribution of data across the nodes, reducing data shuffling and improving overall processing speed.
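The effect of key choice on partition balance can be sketched without Spark at all. The example below is a simplified model, not Spark's implementation: it assigns each key to a partition with a stable hash modulo the partition count, which is similar in spirit to Spark's hash partitioning. The key names and sizes are made up for illustration.

```python
import hashlib
from collections import Counter
from typing import Iterable, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition using a stable hash modulo the partition count."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_sizes(keys: Iterable[str], num_partitions: int) -> List[int]:
    """Return how many records land in each partition."""
    counts = Counter(partition_for(key, num_partitions) for key in keys)
    return [counts.get(index, 0) for index in range(num_partitions)]


# Hypothetical datasets: many distinct keys versus one dominant ("hot") key.
balanced_keys = [f"user-{i}" for i in range(10_000)]
skewed_keys = ["user-0"] * 9_000 + [f"user-{i}" for i in range(1_000)]

balanced = partition_sizes(balanced_keys, num_partitions=8)
skewed = partition_sizes(skewed_keys, num_partitions=8)

print("balanced:", balanced)
print("skewed:  ", skewed)
```

With many distinct keys, the records should spread fairly evenly. With one hot key, a single partition receives at least 9,000 of the 10,000 records, so one task does most of the work while the others sit idle. In that situation, choosing a different key or adding a random suffix ("salting") is a common remedy.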

Caching Data

Leveraging Spark’s in-memory caching capabilities can significantly speed up iterative algorithms and machine learning applications. Cache the data you’ll be reusing frequently to minimize the need for recomputation.
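The toy class below shows why caching helps when the same data is read repeatedly. It is a plain-Python stand-in, not Spark's API: `LazyDataset`, `expensive_squares`, and the `compute_calls` counter are hypothetical names used only to count how often the expensive work runs.

```python
from typing import Callable, List, Optional


class LazyDataset:
    """Toy stand-in for a lazily evaluated dataset; not Spark's API."""

    def __init__(self, compute: Callable[[], List[int]]) -> None:
        self._compute = compute
        self._cached: Optional[List[int]] = None
        self._use_cache = False
        self.compute_calls = 0  # how many times the expensive computation ran

    def cache(self) -> "LazyDataset":
        """Mark the dataset so its first materialized result is kept in memory."""
        self._use_cache = True
        return self

    def collect(self) -> List[int]:
        """Materialize the data, recomputing unless a cached copy exists."""
        if self._use_cache and self._cached is not None:
            return self._cached
        self.compute_calls += 1
        result = self._compute()
        if self._use_cache:
            self._cached = result
        return result


def expensive_squares() -> List[int]:
    # Stands in for a costly chain of transformations.
    return [n * n for n in range(1_000)]


uncached = LazyDataset(expensive_squares)
cached = LazyDataset(expensive_squares).cache()

for _ in range(5):  # e.g. five passes of an iterative algorithm
    uncached.collect()
    cached.collect()

print(uncached.compute_calls, cached.compute_calls)  # prints "5 1"
```

In Spark itself, the corresponding calls are `cache()` or `persist()` on an RDD or DataFrame, and `unpersist()` to release the memory once the data is no longer needed. Cached data occupies executor memory, so it pays off mainly for data that is reused several times.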

Tuning Spark Configuration

Fine-tuning Spark’s configuration settings, such as memory allocation, parallelism, and garbage collection, can optimize performance for your specific use case.
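As a starting point, the sketch below collects a few commonly tuned properties and renders them in the whitespace-separated "key value" layout used by spark-defaults.conf. The property names are standard Spark configuration keys, but every value is illustrative only, and the `to_spark_defaults` helper is a hypothetical convenience function.

```python
from typing import Dict

# Illustrative values only; suitable settings depend on cluster size and workload.
settings: Dict[str, str] = {
    "spark.executor.memory": "4g",         # heap size per executor
    "spark.executor.cores": "2",           # concurrent tasks per executor
    "spark.default.parallelism": "64",     # default partition count for RDD operations
    "spark.sql.shuffle.partitions": "64",  # partitions used by DataFrame/SQL shuffles
    "spark.executor.extraJavaOptions": "-XX:+UseG1GC",  # JVM garbage collector choice
}


def to_spark_defaults(conf: Dict[str, str]) -> str:
    """Render settings as aligned 'key value' lines, one property per line."""
    width = max(len(key) for key in conf)
    return "\n".join(f"{key.ljust(width)}  {value}" for key, value in conf.items())


print(to_spark_defaults(settings))
```

The same properties can be passed per job with `--conf key=value` on spark-submit. Change one setting at a time and measure, since the best values vary with data size and cluster resources.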

Frequently Asked Questions

1. How does Spark differ from Hadoop?

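Both are big data frameworks, but they are not direct equivalents. Hadoop bundles storage (HDFS), resource management (YARN), and the MapReduce processing engine, while Spark is a processing engine that can run on top of Hadoop’s storage and resource manager. Compared with MapReduce, Spark offers advantages in speed, ease of use, and flexibility, largely because it keeps intermediate data in memory instead of writing it to disk between steps.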

2. What are the programming languages supported by Spark?

Spark supports various programming languages, including Scala, Java, Python, and R. This enables developers with different skill sets to work with Spark easily.

3. Can Spark run on a Hadoop cluster?

Yes, Spark can be easily integrated with Hadoop and can run on Hadoop’s YARN cluster manager. This allows businesses to leverage their existing Hadoop infrastructure while taking advantage of Spark’s superior capabilities.

4. Is Spark suitable for real-time data processing?

Yes. Spark Streaming ingests data streams and processes them in small batches as they arrive, making it suitable for applications that need near-real-time insights and decision-making.

5. How do I optimize Spark performance?

Optimizing Spark performance involves several factors, such as proper data partitioning, caching data, and tuning configuration settings. Following best practices and fine-tuning these factors can significantly improve your Spark application’s performance.

6. What is the cost of using Spark?

Apache Spark is an open-source project, meaning it’s free to use. However, running Spark on a cluster or cloud infrastructure will incur costs associated with hardware, cloud services, and support.

Conclusion

Apache Spark has changed the way businesses handle and analyze data. Its speed, ease of use, and flexibility make it a top choice for big data processing and analytics applications. By partitioning data well, caching what is reused, and tuning configuration for the workload, teams can get insights from their data faster and make better-informed decisions.
